User Research | Think Aloud | Contextual Inquiry | Speed Dating

Role: Research & Design Lead. I drove the development of research guides and participant recruitment, and designed the visual presentation of content and the prototype.

Team Members: Jieyu Zhou (MS Computation Design) - Researcher & Project Manager, Rebecca Li (B.S. Information Systems) - Researcher & Quality Control

As part of the course User-Centered Research & Evaluation, I worked with a team to understand what role everyday users can play in auditing algorithmic biases on social media platforms. Algorithmic bias is a pervasive issue: the technologies people engage with every day often perpetuate it without users even realizing, due to a lack of education and of channels for proper remediation. Through an array of research methods such as contextual inquiry, think aloud studies, and speed dating, our team uncovered insights about how we can support everyday auditing of algorithmic biases. We then prototyped initial concepts to address these challenges in the form of design strategies focusing on education, amplification, and remediation.

Project Context

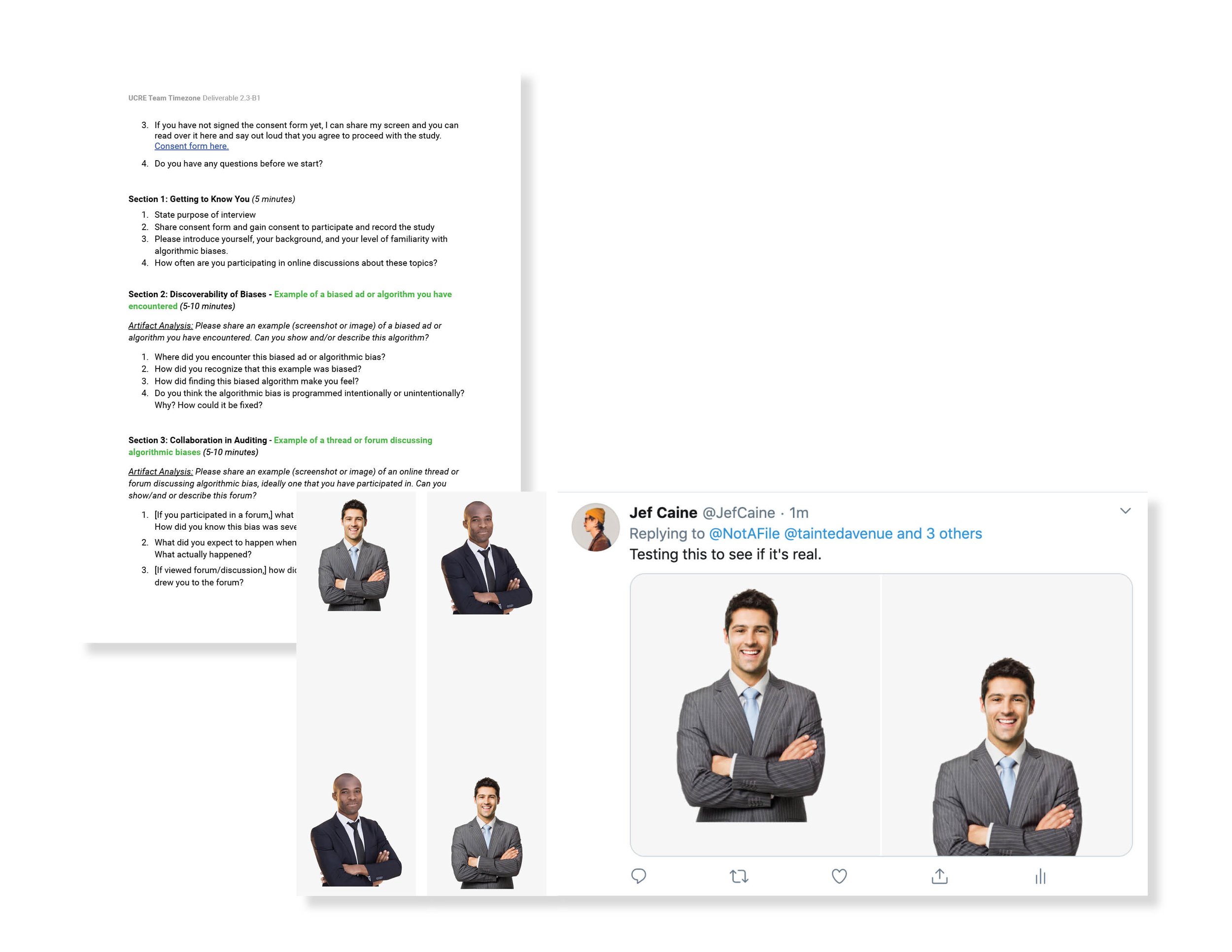

Examples of algorithmic biases on Google Images search and on Twitter’s cropping algorithms

Our project focused on the problem space of everyday auditing of algorithmic biases. Algorithmic biases are errors in technology that can be detrimental to a given population of people (e.g., groups of a certain race, gender, sexuality, age, or health issue). They are often created unintentionally, arising when the data that trains the algorithm is biased. For example, if a company trains an algorithm to look for suitable candidates based on its current employee base, but that employee base is predominantly white and male, the algorithm may become discriminatory towards people who are not white and not male.

Shown above are examples of Google Images surfacing images of white middle-aged men when a user googles “professor” style, and of Twitter cropping out Black faces in favor of white ones for the thumbnails of tweets.

Our team set out to mitigate these biases, which are incredibly pervasive and harmful, by supporting everyday users in identifying and auditing them.

Background Research

Pieces of data analysis from our report

Data Analysis

To dive into the problem space, our team analyzed an existing Twitter dataset using Python and Google Sheets. Through this analysis, we learned that the top 10 tweeters wrote a large majority of the tweets about bias, suggesting that the burden of auditing falls largely on the shoulders of a few.
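As a rough illustration of this kind of concentration check, here is a minimal Python sketch. It is not the script our team used. The function name `top_author_share` and the sample handles are hypothetical, and the input is assumed to be a plain list with one author handle per tweet.

```python
from collections import Counter


def top_author_share(authors, n=10):
    """Return the fraction of tweets written by the n most active authors."""
    counts = Counter(authors)
    if not counts:
        return 0.0
    # most_common(n) yields (author, tweet_count) pairs, highest count first.
    top_total = sum(count for _, count in counts.most_common(n))
    return top_total / sum(counts.values())


# Made-up handles for illustration only; this is not the project's data.
sample_authors = ["@a"] * 6 + ["@b"] * 3 + ["@c"]
print(top_author_share(sample_authors, n=2))  # 9 of the 10 tweets -> 0.9
```

A share close to 1.0 for a small `n` would point to the same pattern described above, where a handful of accounts carries most of the auditing conversation.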

An academic article and Nature magazine article we read about algorithmic biases

Literature Review

In addition to analyzing quantitative data, we read 15 articles (both academic and informal) about algorithmic biases and everyday audits. We learned that everyday auditing is a multi-stage process and that collaboration by many users leads to more meaningful remediation of biases.

How might we foster collaboration in everyday auditing of algorithmic biases to amplify impact?

Problem Definition

Stakeholder Map

Before diving into the user research, our team aligned on a comprehensive stakeholder map of the ecosystem involving social media company employees, social media company customers (corporations that buy advertising), users/consumers who can audit biases and are harmed by biases, and bystanders. Mapping out the different connections helped us identify the direction in which value flowed and which players were key in addressing algorithmic biases.

Stakeholder map for social media platforms Facebook & Instagram

User Research

Think Aloud Protocol

Think Aloud protocol and an example of an annotated screenshot of Reddit from our think aloud study showing how users consider timing in deciding whether to contribute to a forum or not

Interview Goals & Guide: The first step was conducting a think aloud study for generative research. Given the niche problem space, our team decided to conduct a think aloud study on analogous platforms such as Reddit where users “audit” other topics. We framed the guide around three key areas: 1) viewing a forum, 2) contributing to or amplifying a topic on a forum, and 3) recruiting additional users/next steps.

Recruitment: We recruited users who regularly used online forums such as Reddit and Facebook groups, especially those who contribute in ways similar to audits on other topics. For example, one user we interviewed was part of a Reddit forum to stop multi-level marketing schemes.

Key Takeaways: The think aloud studies yielded a number of key takeaways. One is that anonymity leads to authenticity, which is especially valuable for vulnerable topics such as biases. We also learned that posting on forums is a calculated decision: users are most inclined to post when they feel their contribution will have an impact (mostly when it is one of the first few comments on the thread).

Contextual Inquiry

A page from the contextual interview guide outlining the artifact analysis prompts; artifacts brought to the session by a user who ran a test on Twitter with photos of a white and a Black businessman arranged in different orientations to see which one Twitter would crop out

Interview Goals & Guide: After synthesizing the insights from the think aloud studies, we decided to conduct contextual interviews to speak directly with real users. Due to the spontaneous nature of auditing, it was difficult to perform a true contextual inquiry, so we employed artifact analysis and directed storytelling as contextual methods to better understand user behaviors. We asked participants to bring artifacts or examples of previous algorithmic bias audits they had participated in or witnessed.

Recruitment: For the contextual inquiry, it was critical that we speak with users who genuinely had experience in auditing algorithmic biases. To recruit these participants, our team reached out to Twitter users we had read about in media articles covering the 2020 audit of Twitter’s racial cropping algorithm.

Key Takeaways: Through the contextual interviews, we learned that users are motivated to post about algorithmic bias by feelings of shock or surprise. They feel individual users can play a role in mitigating these biases by making noise, amplifying these messages, and educating the public about these issues, especially if they have background knowledge or expertise in this area. Lastly, our research uncovered that audits that take place on the same platform where the bias occurred foster greater amplification.

Synthesis

After conducting primary research through think aloud studies and contextual inquiries, our team began to synthesize the data by writing thorough interpretation notes and then using affinity mapping as a way of uncovering key themes and insights. Before affinity mapping, we also conducted a walk the wall exercise where we laid out all of our previous work and noted commonalities and insights throughout.

Our affinity diagram grouped into multiple levels of insights

Ideation

Speed Dating

Sketches from the Crazy 8’s exercise and subsequent voting activity

After sifting through the insights, we highlighted the key user needs and brainstormed potential concepts and scenarios related to those needs through a Crazy 8’s exercise in which we each had to draw 8 sketches in 16 minutes. Our team then voted on which needs were most salient to build into storyboards that we would use during speed dating sessions with users.

Method: For the speed dating sessions, we showed users a total of 12 storyboards (3 for each of 4 user needs) in rapid succession. Participants were asked to respond to the concept outlined in each storyboard and then answered a series of follow-up questions. Through these sessions, we gained feedback on initial concepts and validated user needs.

Speed Dating Sessions

Below are three storyboards related to the user need of education about algorithmic biases. The storyboards increase in riskiness from one to the next.

Key Takeaway(s): Our speed dating sessions validated our insight that users need centralized resources to learn about algorithmic biases, and that compiling the various content on the internet about algorithmic biases is one way to provide them. Additionally, these sessions further emphasized users’ desire to audit algorithmic biases in the context in which they were encountered. For example, users wanted to discuss Twitter’s racial cropping algorithm on Twitter itself, as opposed to a third-party platform, so they could quickly test hypotheses and discuss the results.

Insights & Evidence

After conducting our speed dating sessions, we crystallized four key insights backed by user evidence and quotes from our user research.

Anonymity leads to more authenticity and genuine discussion while curated platforms only show good aspects of users’ lives.

Users with expertise have a responsibility to educate others while everyday users can “make enough noise” to amplify issues.

Auditing biases in the context in which they were encountered fosters more testing and collaboration.

Amplification leads to remediation by raising enough awareness to push tech companies to take action.

Evidence

Experience Prototyping

The final stage of our process was ideating around potential solutions and prototyping initial concepts. Our solution proposed design strategies that can be incorporated into different social media platforms with the implementation shown for Twitter. The strategies focus on three key areas: 1) education, 2) amplification, and 3) remediation.

Education

Tag for content pertaining to algorithmic biases as determined by artificial intelligence

Tag allows users to learn more about biases using information crowdsourced from verified experts such as those that work in tech

Amplification

When a user goes to post about a bias, a note from text analysis suggests a hashtag to link the post with similar ones, creating a centralized source for information about this bias

Users can post anonymously if the content makes them feel vulnerable

If encountering a post about a bias, users are nudged to like or retweet in order to amplify the content

Remediation

When viewing centralized posts about algorithmic biases, users can click to “View Company Feedback”

Users see a timeline of the company’s progress in addressing the issue, including the different stages of remediation, the estimated time in which it will be addressed, and comments from the engineers behind the algorithm

Summary

Key Learnings

While this was a school project, these strategies can likely be used and further studied to promote everyday auditing of algorithmic biases. Through this project, I learned about algorithmic biases as well as user research methods I had not used before, such as speed dating. One of the most shocking takeaways for me was how few people even know what an algorithmic bias is, let alone how to report or audit one. In finding participants for our research engagements, I reached out to many peers who work in the technology industry, and very few knew what an algorithmic bias is. My biggest takeaway from this project is that there is a huge gap in knowledge about algorithmic bias, one that exists even among those creating these technologies. It is critical that those working in the tech industry are aware of biases and can work to remedy this pervasive and detrimental issue.